Sequence

When we sequence things, we arrange them in a particular order. Sequence-based algorithms are made from a precise set of instructions carried out one after another. For example:

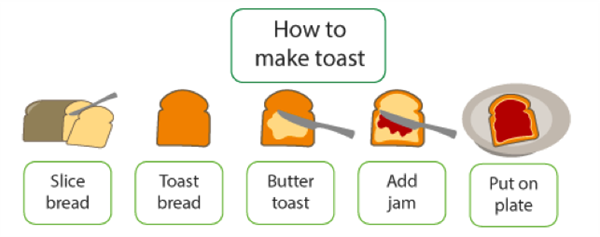

A sequence of instructions (an algorithm) for making toast.

Similarly, computer programs are built from precise sequences of unambiguous instructions. When pupils start working with floor turtles, they build their programs as simple sequences of button presses. Each press stores a specific command; when the program is run, each command in turn sends specific signals to the motors driving the wheels, and the turtle moves accordingly. Pupils' first Scratch programs are also likely to be simple sequences of instructions. Again, these need to be precise and unambiguous, and the order of the instructions matters. In developing their algorithms, pupils will have had to work out exactly which order to put the steps in to complete a task.
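The idea of a stored program that is run one command at a time can be sketched in a few lines of Python. This is a minimal simulation, not how any real floor turtle works internally: the command names `FORWARD`, `LEFT` and `RIGHT`, the grid of unit steps and the `run` function are all hypothetical, chosen only to show commands being stored in a list and then carried out strictly in order.

```python
# A minimal simulation of a floor turtle (hypothetical command names).
# The program is stored as a list of commands and run strictly in order.

HEADINGS = ["N", "E", "S", "W"]  # listed in clockwise order
STEPS = {"N": (0, 1), "E": (1, 0), "S": (0, -1), "W": (-1, 0)}


def run(program, start=(0, 0), heading="N"):
    """Carry out each command in turn; return the final position and heading."""
    x, y = start
    h = HEADINGS.index(heading)
    for command in program:
        if command == "FORWARD":
            dx, dy = STEPS[HEADINGS[h]]  # move one grid square the way we face
            x, y = x + dx, y + dy
        elif command == "RIGHT":
            h = (h + 1) % 4  # quarter turn clockwise
        elif command == "LEFT":
            h = (h - 1) % 4  # quarter turn anticlockwise
        else:
            raise ValueError(f"Unknown command: {command}")
    return (x, y), HEADINGS[h]


program = ["FORWARD", "FORWARD", "RIGHT", "FORWARD"]
print(run(program))  # ((1, 2), 'E')
```

Each button press corresponds to one item appended to `program`; nothing moves until `run` is called, and then the commands are obeyed one after another, just as the turtle does when its "go" button is pressed.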

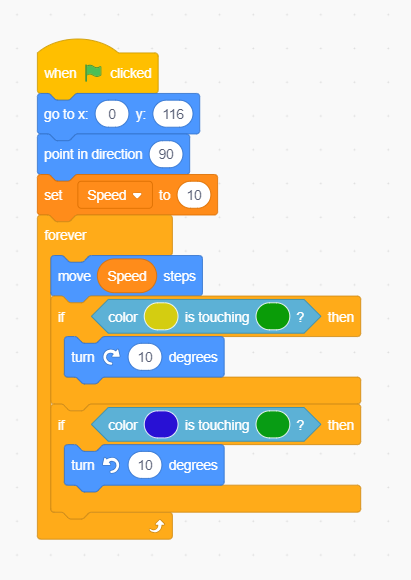

A program which children might create in Scratch.

Why is sequence important?

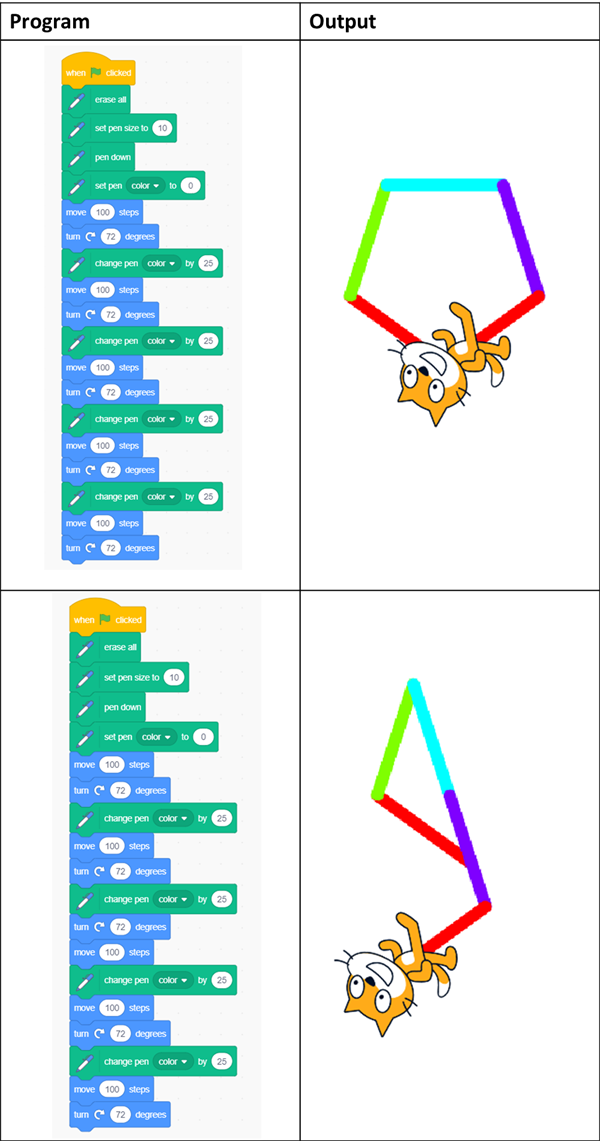

In sequence-based algorithms, such as a recipe for baking a cake, the order of many (though not all) of the steps is crucial. Similarly, most programming languages are based on a series of statements that are processed in sequence. The outcome of a program depends not only on its constituent commands but also on their order. The importance of sequence to the output of a Scratch program is illustrated below. In this table, the program in the second row has had just two commands swapped in its sequence; as a result, its output is quite different:

Two programs in Scratch, constructed from commands which are identical but which are sequenced differently. The second has two commands swapped in its sequence, producing a different output as a result.
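The same effect can be shown outside Scratch. The Python sketch below is an analogy rather than a copy of the Scratch programs in the table: the two commands `add_three` and `double` and the helper `run` are hypothetical. Both programs contain exactly the same commands; only their order differs, and so does the result.

```python
# Two programs built from identical commands; only the order differs.


def add_three(n):
    return n + 3


def double(n):
    return n * 2


def run(program, value):
    """Apply each command to the value, one after another, in sequence."""
    for command in program:
        value = command(value)
    return value


program_a = [add_three, double]
program_b = [double, add_three]  # the same two commands, swapped

print(run(program_a, 5))  # (5 + 3) * 2 = 16
print(run(program_b, 5))  # (5 * 2) + 3 = 13
```

Starting from 5, the first program gives 16 and the second gives 13, even though a pupil listing the commands used in each would write down the same two items.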

This has been a fundamental principle of computer science since Charles Babbage designed one of the first mechanical computers approximately 200 years ago. Modern-day digital computers use sequence in a range of ways. The order of our keystrokes on the keyboard is a sequence. Each key press is itself translated into a sequence of binary digits (bits) that represents it within the computer.

A photograph of Charles Babbage.